From Vision to Main: How I Code With AI Agents Now

The way I write software has fundamentally changed. A year ago, I spent most of my time in the code. Today, I spend most of my time thinking about the code, and the output has never been better.

I call this approach strategic agentic development. The core idea: instead of treating planning and coding as separate phases, I’ve built a system where planning is the primary engineering activity. The agents handle the implementation.

This isn’t theoretical. I’ve packaged everything I’ve learned into an open-source Claude Code plugin called ai-dev. Here’s how it works, and why it’s changed everything.

The Old Way Was Backwards

Traditional software development assumes coding is the bottleneck. You plan just enough to start, then iterate in code. This made sense when writing code was the hard part.

But with AI coding agents, that assumption is inverted. The hard part isn’t writing code anymore. It’s knowing what to write and why.

I kept noticing the same failure mode: I’d ask an agent to build something, it would produce code quickly, and then I’d realize I hadn’t thought through the actual requirements. The agent did exactly what I asked. The problem was what I asked for.

The bottleneck moved upstream, to clarity of thought.

The Compound Engineering Insight

The team at Every articulated a key principle in their Compound Engineering approach: each unit of engineering work should make subsequent work easier, not harder.

Their plugin implements a cycle: Plan → Work → Review → Compound.

I took this further. If planning is 80% of the value, why not systematize planning itself?

Going Upstream: Strategic Planning as Code

The ai-dev plugin adds a full strategic layer on top of the work cycle. The flow looks like this:

Vision → Strategy → OKRs → Epics → User Stories → Technical Plans → Implementation → Review → Main

Each layer feeds the next. And critically, each artifact lives in your repo. Strategy becomes documentation, not just conversation.
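To make "each layer feeds the next" concrete, here is a minimal Python sketch of the chain as linked artifacts, where every artifact points at its parent one layer up and can trace itself back to the vision. The `Artifact` class, its fields, and the `strategy/...` file paths are hypothetical illustrations, not the plugin's actual data model.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Planning layers, top to bottom. Implementation, Review and Main are
# process steps (code and commits) rather than planning documents,
# so this hypothetical model leaves them out.
LAYERS = ["vision", "strategy", "okr", "epic", "user_story", "technical_plan"]


@dataclass
class Artifact:
    """One planning artifact stored in the repo (hypothetical model)."""
    layer: str
    title: str
    path: str                          # e.g. a markdown file under strategy/
    parent: Optional[Artifact] = None  # the artifact exactly one layer up

    def __post_init__(self) -> None:
        # Reject unknown layers and parents that skip or reverse a layer.
        expected = LAYERS.index(self.layer) - 1
        if self.parent is not None and LAYERS.index(self.parent.layer) != expected:
            raise ValueError(f"a {self.layer} cannot hang off a {self.parent.layer}")

    def trace(self) -> list[str]:
        """Return 'layer: title' entries from the root down to this artifact."""
        chain: list[str] = []
        node: Optional[Artifact] = self
        while node is not None:
            chain.append(f"{node.layer}: {node.title}")
            node = node.parent
        return list(reversed(chain))
```

Calling `trace()` on a technical plan walks the parent links upward, so any plan can explain which story, epic, OKR, strategy and vision it serves.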

The Kickoff: 8 Phases of Structured Thinking

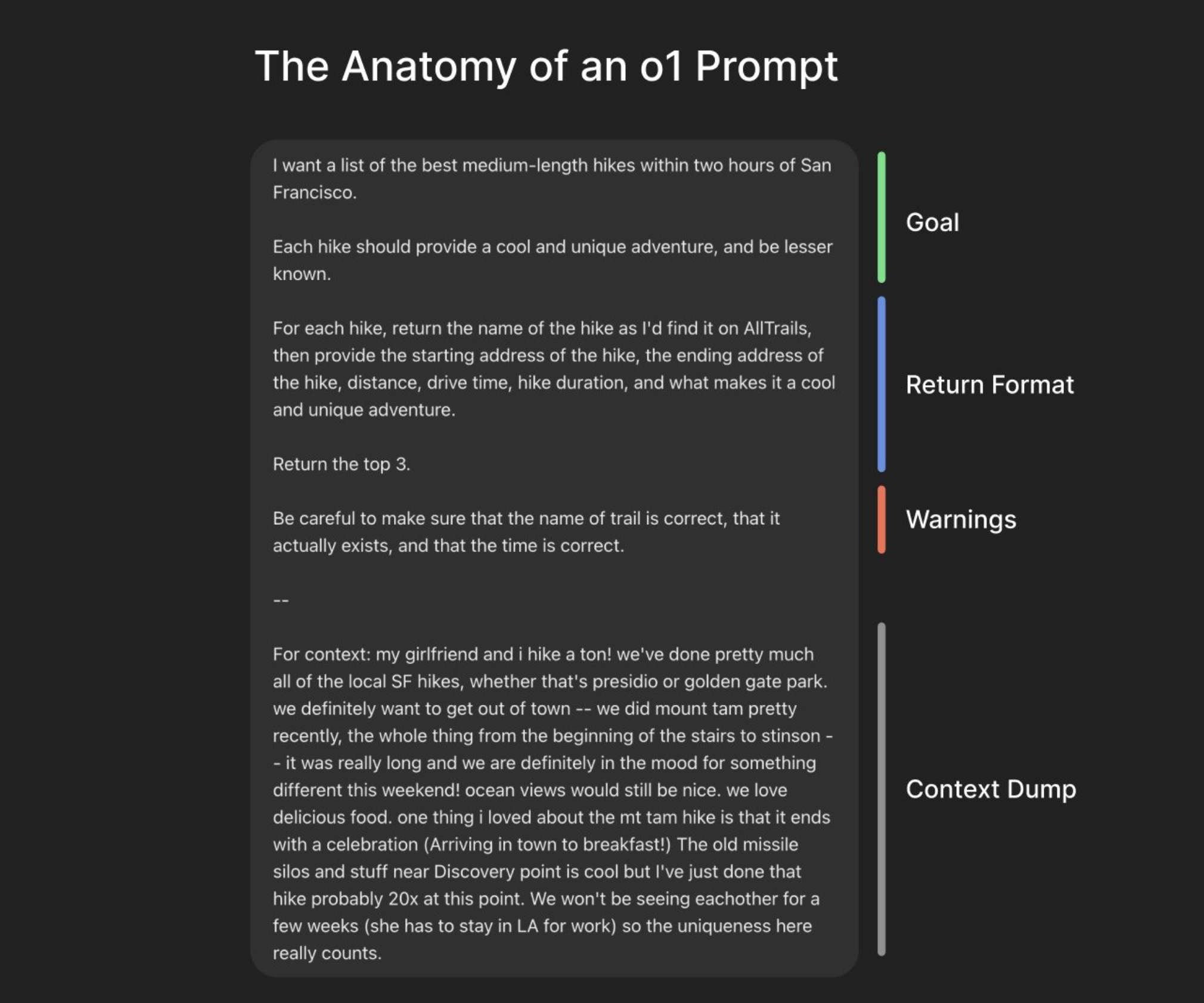

When I start a new project, I run /ai-dev:kickoff. This triggers an 8-phase Socratic planning session:

- Problem Space: What are we actually solving?

- North Star: What’s the ultimate impact we want?

- Vision: What does success look like in 3-5 years?

- Mission: How do we operate? What are our values?

- Strategy & Non-Goals: What we will and won’t do

- Success Metrics: How we measure progress

- OKRs: Quarterly objectives with key results

- Epics & User Stories: The actual work, mapped to GitHub

The output isn’t vague prose. It’s structured markdown in a /strategy/ directory, living documentation that evolves with the project.

The non-goals are particularly powerful. Explicit constraints prevent scope creep before it starts.

From Strategy to Shipped Code

Strategy documents are useless if they don’t connect to execution. Here’s how ai-dev bridges the gap:

GitHub as Single Source of Truth

Epics become GitHub Milestones. User stories become GitHub Issues. Every piece of work traces back to an OKR, which traces back to the strategy.
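A minimal sketch of that mapping, assuming the `Epic` and `UserStory` shapes below (hypothetical, not ai-dev's format). It only builds request bodies for GitHub's REST endpoints that create a milestone (`POST /repos/{owner}/{repo}/milestones`) and an issue (`POST /repos/{owner}/{repo}/issues`); sending them is left to whatever HTTP client you use.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    title: str
    acceptance_criteria: list[str]
    okr: str  # hypothetical: the key result this story supports


@dataclass
class Epic:
    title: str
    description: str
    stories: list[UserStory] = field(default_factory=list)


def milestone_payload(epic: Epic) -> dict:
    """Request body for POST /repos/{owner}/{repo}/milestones."""
    return {"title": epic.title, "description": epic.description, "state": "open"}


def issue_payloads(epic: Epic, milestone_number: int) -> list[dict]:
    """Request bodies for POST /repos/{owner}/{repo}/issues, one per story.

    GitHub links issues to milestones by the milestone's `number`
    (returned when the milestone is created), not by its title.
    """
    payloads = []
    for story in epic.stories:
        # Acceptance criteria become a task-list checklist in the issue body,
        # and the first line records which OKR the story traces back to.
        checklist = "\n".join(f"- [ ] {c}" for c in story.acceptance_criteria)
        body = f"Supports: {story.okr}\n\n## Acceptance criteria\n{checklist}"
        payloads.append({"title": story.title, "body": body, "milestone": milestone_number})
    return payloads
```

Putting the OKR reference in each issue body is one simple way to keep the "traces back to an OKR" link visible on GitHub itself.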

When I run /ai-dev:plan-issue #123, the agent reads the issue, analyzes the codebase, and produces a detailed technical implementation plan. The plan explicitly references which acceptance criteria it addresses and which OKR it supports.
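Checking that a plan really covers its issue can be mechanical. The sketch below assumes a hypothetical format in which acceptance criteria carry IDs like `AC1` and each plan step lists the IDs it addresses; it reports criteria no step covers and IDs a step cites that the issue never defined.

```python
from dataclasses import dataclass


@dataclass
class PlanStep:
    description: str
    addresses: set[str]  # hypothetical: acceptance-criterion IDs, e.g. {"AC1"}


def uncovered_criteria(criteria: dict[str, str], steps: list[PlanStep]) -> list[str]:
    """IDs of acceptance criteria that no plan step claims to address."""
    covered: set[str] = set()
    for step in steps:
        covered |= step.addresses
    # Keep the issue's own ordering so the report reads like the issue.
    return [cid for cid in criteria if cid not in covered]


def unknown_references(criteria: dict[str, str], steps: list[PlanStep]) -> list[str]:
    """IDs cited by some plan step that do not exist in the issue."""
    cited: set[str] = set()
    for step in steps:
        cited |= step.addresses
    return sorted(cited - criteria.keys())
```

An empty result from both functions is a cheap precondition before handing the plan to the implementation step.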

Trunk-Based Development

All work happens on main. No feature branches. This sounds scary until you realize: with quality gates and small atomic commits, you get more safety, not less.

The /ai-dev:work command executes a plan incrementally:

- Each step is tracked with Claude Code's TodoWrite tool

- Each completed step gets its own commit

- Tests and linting run before anything hits main

If something breaks, you revert one small commit. Compare that to merging a 2-week-old feature branch.
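The rule that nothing hits main until the gates pass can be sketched as a small runner that executes each check in order and stops at the first failure. The gate commands (`pytest -q`, `ruff check .`) are placeholders for whatever your project uses, and this is an illustration of the idea rather than the plugin's implementation.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class GateResult:
    name: str
    passed: bool
    output: str


def run_gates(gates: list[tuple[str, list[str]]]) -> list[GateResult]:
    """Run each (name, argv) gate in order, stopping at the first failure."""
    results: list[GateResult] = []
    for name, argv in gates:
        proc = subprocess.run(argv, capture_output=True, text=True)
        passed = proc.returncode == 0
        results.append(GateResult(name, passed, proc.stdout + proc.stderr))
        if not passed:
            break  # later gates, and the push itself, are skipped
    return results


def safe_to_push(results: list[GateResult], expected: int) -> bool:
    """True only if every expected gate actually ran and passed."""
    return len(results) == expected and all(r.passed for r in results)


# Placeholder gates; substitute your project's real test and lint commands.
GATES = [
    ("tests", ["pytest", "-q"]),
    ("lint", ["ruff", "check", "."]),
]
```

A commit step would call `run_gates(GATES)` and run `git push` only when `safe_to_push(results, len(GATES))` is true. Failing fast keeps the feedback loop short, which is what makes small commits on main practical.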

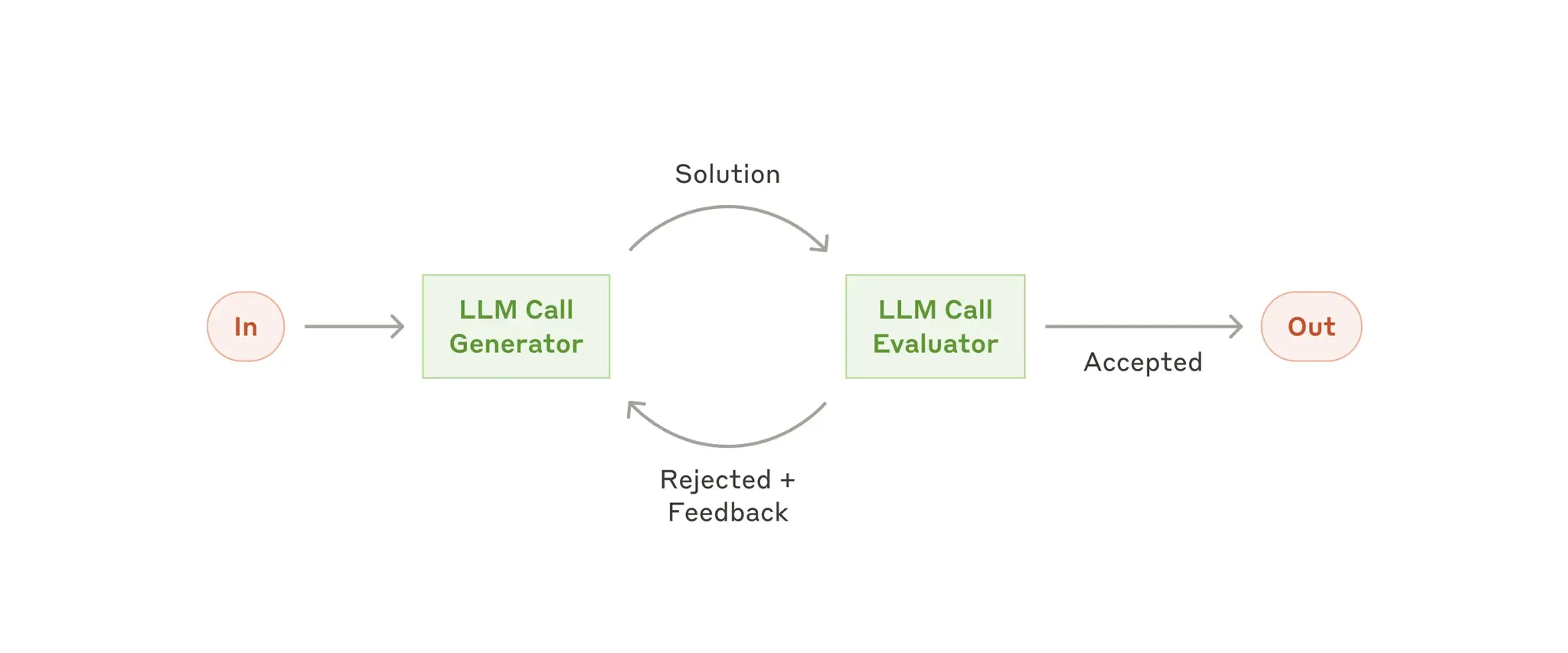

Multi-Agent Review

Before pushing, /ai-dev:review runs three specialized agents in parallel:

- Code Reviewer: Quality and correctness

- Security Auditor: Vulnerability scanning

- Test Architect: Coverage gaps

The findings get synthesized into actionable feedback. Three expert perspectives in seconds.
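The fan-out/fan-in shape of that review can be sketched with `asyncio.gather`. The three reviewer functions are stubs returning canned findings, and the severity-based synthesis is an assumption about what "synthesized" could mean; nothing here reflects how ai-dev actually invokes its agents.

```python
import asyncio
from dataclasses import dataclass

SEVERITY_ORDER = {"high": 0, "medium": 1, "low": 2}


@dataclass(frozen=True)
class Finding:
    reviewer: str
    severity: str  # "high", "medium", or "low"
    location: str
    message: str


async def review_code(diff: str) -> list[Finding]:
    # Stub: a real reviewer would send the diff to a model.
    return [Finding("code", "low", "app.py:10", "Rename `x` to something descriptive")]


async def audit_security(diff: str) -> list[Finding]:
    # Stub security auditor.
    return [Finding("security", "high", "app.py:42", "SQL built with string formatting")]


async def check_test_coverage(diff: str) -> list[Finding]:
    # Stub test architect.
    return [Finding("tests", "medium", "app.py:42", "No test covers the query path")]


async def review(diff: str) -> list[Finding]:
    """Run all reviewers concurrently, then merge their findings."""
    batches = await asyncio.gather(
        review_code(diff), audit_security(diff), check_test_coverage(diff)
    )
    findings = [f for batch in batches for f in batch]
    # Most severe first; within a severity level, group by location.
    return sorted(findings, key=lambda f: (SEVERITY_ORDER[f.severity], f.location))
```

Running `asyncio.run(review(diff))` waits only as long as the slowest reviewer, and the sorted list puts the security issue at the top where it belongs.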

The 80/20 Flip

Here’s what changed for me:

- Before: 20% planning, 80% coding

- After: 80% planning, 20% reviewing agent output

This isn’t laziness. It’s leverage. The agents can write code faster than I can type. But they can’t decide what to build or why. That’s my job now.

And because planning is captured in structured artifacts, not just my head, the agents get better context with every session. Compound returns.

What This Actually Looks Like

A typical workflow now:

First: Review GitHub issues created from OKRs. Pick one. Run /ai-dev:plan-issue #47 to generate a technical plan.

Next: Review the plan. Refine requirements if needed. Run /ai-dev:work to execute. Watch the agent work through each step, committing as it goes.

Finally: Run /ai-dev:review. Address any findings. Run /ai-dev:commit-push to push to main with quality gates.

If necessary: Update the OKRs, then run /ai-dev:sync-strategy to keep GitHub and the strategy docs in sync.
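At its core, keeping docs and GitHub in sync is a reconciliation between two sets of titles. The sketch below assumes a hypothetical markdown convention where stories appear as bullets like `- [story] Sign up with email`; it is not how /ai-dev:sync-strategy is implemented, only an illustration of the drift check such a command has to perform.

```python
import re

# Hypothetical convention: story bullets look like "- [story] Sign up with email".
STORY_LINE = re.compile(r"^\s*-\s*\[story\]\s*(.+?)\s*$", re.IGNORECASE)


def normalize(title: str) -> str:
    """Case- and whitespace-insensitive key, so trivial edits don't count as drift."""
    return " ".join(title.split()).casefold()


def stories_from_markdown(text: str) -> dict[str, str]:
    """Map normalized key -> original title for every story bullet."""
    found: dict[str, str] = {}
    for line in text.splitlines():
        match = STORY_LINE.match(line)
        if match:
            found[normalize(match.group(1))] = match.group(1)
    return found


def reconcile(markdown: str, issue_titles: list[str]) -> dict[str, list[str]]:
    """Report stories missing from GitHub and issues missing from the docs."""
    docs = stories_from_markdown(markdown)
    issues = {normalize(t): t for t in issue_titles}
    return {
        # In the docs but never filed: candidates for new issues.
        "missing_issues": sorted(docs[k] for k in docs.keys() - issues.keys()),
        # Filed on GitHub but absent from the docs: document them or close them.
        "undocumented_issues": sorted(issues[k] for k in issues.keys() - docs.keys()),
    }
```

Both lists empty means the strategy docs and the issue tracker describe the same work.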

The code writes itself. My job is making sure it’s the right code.

Try It Yourself

The ai-dev plugin is open source. Install it in Claude Code and run /ai-dev:kickoff on your next project.

Start with the planning commands even if you’re skeptical. The magic isn’t in the agent automation. It’s in the structured thinking the system forces.

Every unit of strategic clarity makes subsequent engineering easier. That’s the real compound effect.